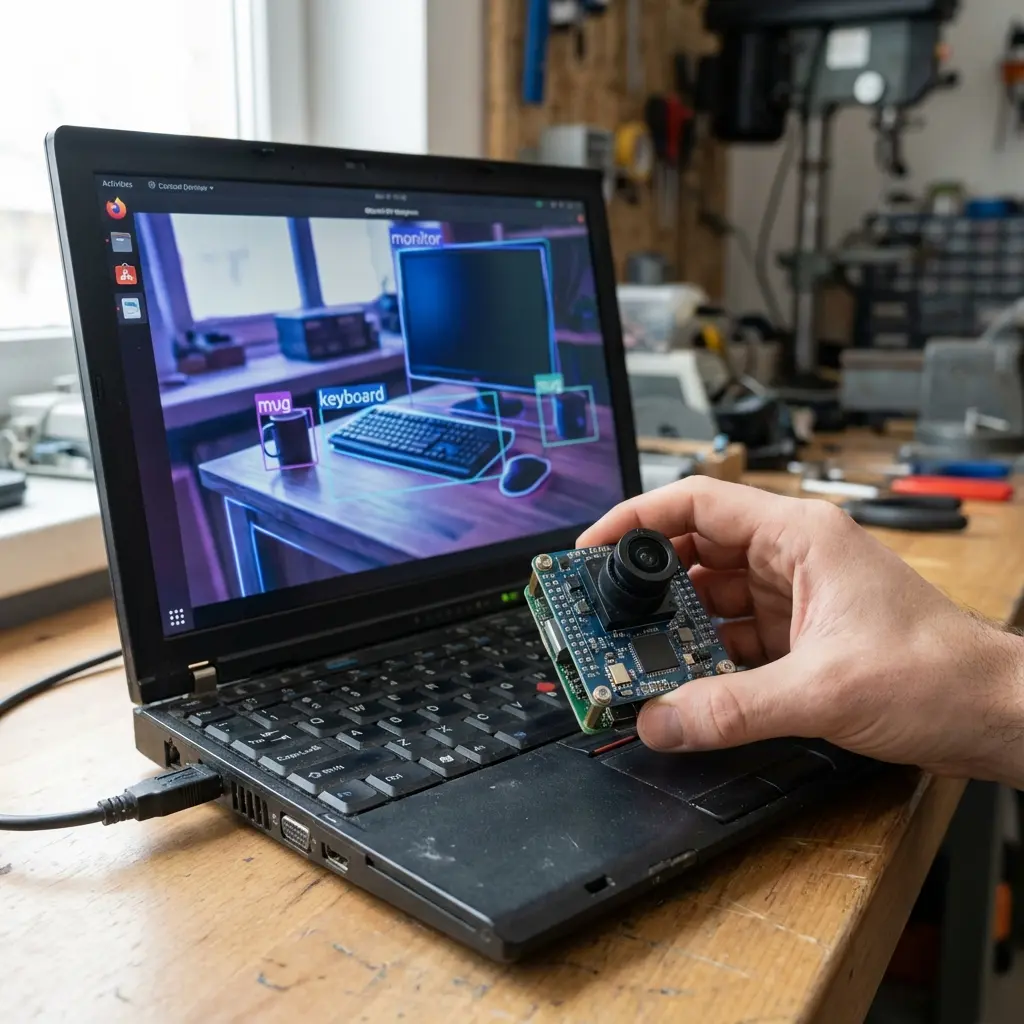

Moondream, a vision-language model that weighs 479 megabytes compressed, runs visual intelligence on hardware you already own. No specialized GPUs. No cloud dependency. No data leaving your infrastructure.

This isn't just a technical curiosity. It's a marker of where the edge AI deployment gap is closing, and what that means for organizations still treating visual intelligence as something you rent rather than own.

The Compression Breakthrough Nobody's Talking About

Here's what changed: model compression techniques can now shrink AI models by 80-95% while typically costing no more than 2-3% in accuracy.

That's not incremental improvement. That's the difference between impossible and deployed.

A 70-billion-parameter Llama model that required four NVIDIA A100 GPUs can run on a single A100 after quantization. The math changes completely. The ownership economics shift from "only enterprises with massive budgets" to "organizations with existing hardware."
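The arithmetic behind that claim can be sketched roughly as follows. This is a back-of-the-envelope estimate, not a deployment guide: it assumes the 40 GB A100 variant and 4-bit quantization (the text above specifies neither), counts only the weights, and ignores activations and KV cache. The function names are illustrative.

```python
import math


def weight_memory_gb(params_billion: float, bits_per_weight: int) -> float:
    """Approximate memory for the weights alone, in GB (1 GB = 10**9 bytes)."""
    total_bytes = params_billion * 1e9 * bits_per_weight / 8
    return total_bytes / 1e9


def gpus_needed(params_billion: float, bits_per_weight: int,
                gpu_memory_gb: float = 40.0) -> int:
    """Minimum GPU count whose combined memory holds the weights.

    Assumption: 40 GB per GPU (the smaller A100 variant). Activations and
    KV cache are ignored, so real deployments need extra headroom.
    """
    needed = weight_memory_gb(params_billion, bits_per_weight)
    return math.ceil(needed / gpu_memory_gb)


if __name__ == "__main__":
    for bits in (16, 8, 4):
        print(f"70B at {bits}-bit: {weight_memory_gb(70, bits):.0f} GB of weights, "
              f"{gpus_needed(70, bits)} x 40 GB GPU(s)")
```

Under these assumptions, 16-bit weights come to about 140 GB (four 40 GB cards), while 4-bit weights come to about 35 GB (one card). Real deployments would need some margin on top.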

Moondream takes this further. At 0.5 billion parameters, quantized to 8-bit, it requires 996 MiB of memory to run. You can deploy sophisticated visual intelligence on laptops, edge devices, even resource-constrained hardware that's been sitting in your infrastructure for years.

The barrier wasn't capability. It was awareness.

What Edge Deployment Actually Means

Let's be specific about what happens when visual AI runs locally.

Data never leaves your device. Not "we encrypt it in transit." Not "we have strong privacy policies." It literally doesn't transmit. The surface area for exposure doesn't exist.

Latency drops to hardware speed. No network round-trips. No API rate limits. No waiting for cloud inference queues. Processing happens at the speed of your local compute.

Cost structure inverts. Instead of paying per API call forever, you pay once for hardware you own. The model becomes an asset on your balance sheet, not an operating expense that compounds with usage.

Clément Delangue, CEO of Hugging Face, asked the question that matters: "Everyone is talking about how we need more AI data centers... why is no one talking about on-device AI?"

His answer: "Running AI on your device: Free, Faster & more energy efficient, 100% privacy and control."

That's not marketing. That's architecture.

The Deployment Gap We're Not Discussing

Interest in edge AI is high. Actual deployment? Different story.

Independent surveys show fewer than one-third of organizations have fully deployed edge AI today. The gap between "we're interested" and "we've implemented" remains wide.

Why?

The convenience bias is real. Cloud APIs are frictionless to start. Swipe a credit card, call an endpoint, get results. Local deployment requires hardware decisions, model selection, integration work. The path of least resistance points toward dependency.

The awareness gap persists. Decision-makers don't realize local infrastructure can match cloud performance now. They remember edge AI from three years ago: limited models, constrained capabilities, significant trade-offs. That world doesn't exist anymore.

The ownership mindset hasn't shifted. Organizations still think in terms of "which tool should we subscribe to" rather than "what infrastructure should we own." They optimize for short-term convenience over long-term asset accumulation.

Moondream matters because it makes the gap visible. When sophisticated visual intelligence runs on a laptop, the "you need massive infrastructure" argument collapses.

What Changes When You Own the Intelligence

Let's walk through what ownership actually means.

Your visual intelligence becomes a sellable business asset. When you sell your company, the AI infrastructure transfers with it. The buyer gets the models, the training, the integration, the accumulated intelligence. That has valuation implications cloud subscriptions don't.

Your competitive intelligence stays competitive. Every image you process through a cloud API potentially trains someone else's model. Every visual analysis you run feeds into a system your competitors might access tomorrow. Local processing means your data accumulation benefits you exclusively.

Your scaling economics shift. Cloud costs compound with usage. Process more images, pay more forever. Local infrastructure has upfront costs, then the marginal cost of processing approaches zero. The crossover point arrives faster than most organizations realize.
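To find your own crossover point, a minimal break-even sketch might look like this. Every number in the example is a hypothetical placeholder, not a quoted price from any provider; substitute your actual hardware cost, per-image cloud price, and local running cost (power, maintenance).

```python
import math


def break_even_images(hardware_cost: float,
                      cloud_price_per_image: float,
                      local_cost_per_image: float = 0.0) -> int:
    """Number of images after which owning hardware is cheaper than paying per call."""
    saving_per_image = cloud_price_per_image - local_cost_per_image
    if saving_per_image <= 0:
        raise ValueError("Local processing must be cheaper per image to break even.")
    return math.ceil(hardware_cost / saving_per_image)


def months_to_break_even(images_needed: int, images_per_month: int) -> float:
    """Time to reach the crossover at a steady monthly volume."""
    return images_needed / images_per_month


if __name__ == "__main__":
    # Hypothetical inputs: $2,000 of hardware, $0.0015 per cloud call,
    # $0.0001 per image in local power and upkeep.
    images = break_even_images(2000, 0.0015, 0.0001)
    print(f"Break-even after {images:,} images")
    print(f"At 250,000 images/month: {months_to_break_even(images, 250_000):.1f} months")
```

The shape of the result matters more than the placeholder figures: the higher your monthly volume, the sooner the upfront cost is recovered.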

Your operational resilience improves. Network outages don't stop processing. API changes don't break workflows. Vendor decisions don't dictate your capabilities. You control the infrastructure, so you control the outcomes.

This isn't theoretical. In EDB's global research, 95% of senior executives said building their own sovereign AI and data platform will be mission-critical within three years.

They're not talking about compliance checkboxes. They're talking about strategic infrastructure that determines competitive positioning.

The Architecture Question Nobody Asks

Here's what we see when we audit organizations considering visual AI:

They start by researching cloud vision APIs. They compare pricing. They evaluate features. They read documentation about what each service can do.

They never ask: "What hardware do we already own that could run this locally?"

That question changes everything. Because most organizations have compute capacity sitting idle. Servers running at 30% utilization. Workstations with spare GPU cycles. Edge devices with processing headroom.

The infrastructure exists. The awareness doesn't.

Moondream runs on hardware you probably already have. The question isn't "can we afford new infrastructure?" It's "can we afford to keep renting what we could own?"

What Performance Parity Actually Looks Like

The edge AI narrative used to be: "You can run limited models locally, but for real capability, you need the cloud."

That's done.

Current edge deployment delivers high-value AI experiences at a fraction of cloud cost and latency. The trade-off isn't capability anymore. It's convenience during setup versus ownership after deployment.

Yes, constraints exist. Memory limitations are real. Energy budgets matter on battery-powered devices. Computation capacity has boundaries.

But those constraints define deployment strategy, not feasibility. You optimize model selection for your hardware profile. You choose quantization levels that balance size and accuracy. You architect for the infrastructure you own rather than the infrastructure you rent.
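As a rough illustration of that selection step, the sketch below picks the most accurate model variant that fits a device's memory budget. Only the 996 MiB figure for the 8-bit variant comes from this article; the other memory figures and all accuracy scores are hypothetical placeholders, and the names `Variant` and `pick_variant` are made up for the example.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Variant:
    name: str
    memory_mib: int            # runtime memory required
    relative_accuracy: float   # 1.0 = unquantized baseline (placeholder scores)


# Only the int8 memory figure (996 MiB) is taken from the article.
# Everything else here is a hypothetical placeholder.
VARIANTS = [
    Variant("fp16 (hypothetical)", 2000, 1.00),
    Variant("int8", 996, 0.99),
    Variant("int4 (hypothetical)", 800, 0.97),
]


def pick_variant(budget_mib: int, headroom_mib: int = 0,
                 variants=VARIANTS) -> Optional[Variant]:
    """Return the most accurate variant that fits in the budget, or None."""
    usable = budget_mib - headroom_mib
    fitting = [v for v in variants if v.memory_mib <= usable]
    if not fitting:
        return None
    return max(fitting, key=lambda v: v.relative_accuracy)


if __name__ == "__main__":
    for budget in (512, 1024, 4096):
        choice = pick_variant(budget)
        print(budget, "MiB ->", choice.name if choice else "nothing fits")
```

The same pattern extends to energy or latency budgets: filter by the constraint first, then optimize for quality among what remains.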

The result? Real-time, low-latency visual processing with improved privacy, running on devices you control.

The Data Center Warning Signal

Aravind Srinivas, co-founder and CEO of Perplexity AI, issued a warning that matters: if AI can run directly on personal devices, the world's sprawling, multi-trillion-dollar data center buildout may no longer make economic sense.

Read that again.

The CEO of a cloud AI company is saying local deployment might make cloud infrastructure obsolete.

We're not there yet. Cloud still dominates for training large models, handling massive scale, and serving global user bases. But the direction is clear: edge deployment is becoming the strategic choice, not the compromise option.

Organizations investing in cloud-only strategies are betting that centralized infrastructure remains economically superior. Organizations building local capabilities are betting that ownership beats rental when performance reaches parity.

Moondream suggests the parity point has arrived for visual intelligence.

What This Means for Your Infrastructure Decisions

You have a choice to make about visual AI.

Option one: Subscribe to a cloud vision API. Swipe the credit card. Start processing images today. Pay per call forever. Feed your visual data into someone else's training pipeline. Build dependency into your operational architecture.

Option two: Deploy local visual intelligence. Invest time in setup. Own the infrastructure. Process unlimited images at marginal cost. Keep your visual data within your boundaries. Build an asset that increases your company's valuation.

The first option is easier today. The second option is valuable tomorrow.

Most organizations choose convenience. They optimize for speed to implementation over long-term ownership. They treat AI as an expense line rather than an asset category.

Then they wake up three years later with massive cloud bills, vendor lock-in, and zero transferable infrastructure when it's time to sell.

Moondream makes the alternative visible. Visual intelligence that fits in 479 megabytes. Runs on existing hardware. Processes locally. Transfers with your business.

The Ownership Question

We keep coming back to the same pattern: organizations unknowingly choose dependency when ownership is available.

Not because ownership is impossible. Because the option isn't visible.

Cloud providers don't advertise local alternatives. Tool vendors don't highlight that you could own the infrastructure instead of renting it. The entire market incentive structure pushes toward subscription models that benefit vendors, not asset accumulation that benefits you.

Moondream breaks that pattern by existing. By running on laptops. By fitting in less than half a gigabyte compressed. By proving that sophisticated visual AI doesn't require cloud dependency.

The question isn't whether edge AI works. It does.

The question is whether you'll keep renting intelligence you could own.

Your infrastructure decisions today determine your competitive position tomorrow. Your data sovereignty choices now shape your valuation later. Your ownership strategy at this moment defines whether AI becomes an asset or remains an expense.

Moondream is a signal. The compression breakthrough happened. Performance parity arrived. Local deployment works.

What you build next is up to you.